Console #89 -- Gooey, jc, and deep-email-validator

An interview with Kelly of jc

Sponsorship

Trainual

Trainual is seeking Senior Software Engineers to help tackle tough problems, write clean code, and build amazing tech that transforms how small businesses onboard, train, and scale their teams. The Trainual HQ is in Scottsdale, AZ but this full-time, full-stack position is open to remote applicants too. You're right for the role if you're a great communicator and collaborator, a hands-on team player who gets things done, and can work in Ruby on Rails, React, Postgres, JS, CoffeeScript, HAML, and SCSS.


Projects

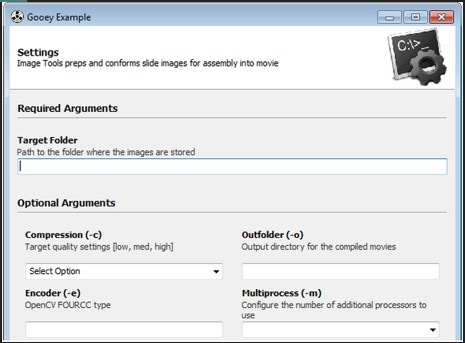

Gooey

Gooey transforms (almost) any Python command-line application into a user-friendly GUI

language: Python, stars: 15231, watchers: 278, forks: 817, issues: 96

last commit: December 02, 2021, first commit: January 02, 2014

social: chriskiehl.com

repo: github.com/chriskiehl/Gooey

jc

jc is a CLI tool and Python library that converts the output of popular command-line tools and file-types to JSON or Dictionaries. This allows piping of output to tools like jq and simplifying automation scripts.

language: Python, stars: 2771, watchers: 23, forks: 59, issues: 3

last commit: January 03, 2022, first commit: October 15, 2019

social: blog.kellybrazil.com/

repo: github.com/kellyjonbrazil/jc

deep-email-validator

deep email validator validates email addresses based on regex, common typos, disposable email blacklists, DNS records, and SMTP server response.

language: TypeScript, stars: 512, watchers: 9, forks: 44, issues: 11

last commit: December 21, 2021, first commit: March 07, 2020

social: twitter.com/da_adler

repo: github.com/mfbx9da4/deep-email-validator

An Interview With Kelly of jc

Hey Kelly! Thanks for joining us! Let’s start with your background. Where have you worked in the past, where are you from, how did you learn how to program, and what languages or frameworks do you like?

I had an early interest in computers and programming - my Aunt gave me a Commodore 64 when I was seven or eight and I was captivated with programming in BASIC. My mom was a teacher’s aide at my grammar school and I would hang out in her room after school playing with the Apple II computers there. Computers really captured my imagination, though I didn’t know anyone else that knew anything about them, so I learned on my own.

I’ve been in the IT and network security space for over 20 years now. I started my IT career back in the mid-90’s after high-school as the IT guy for Corn Nuts snack food. I cut my teeth on Token Ring, Novell Netware, AS-400’s, Windows 3.11 running on diskless workstations, and started hearing about this thing called Linux. After a few years I moved to the sales side as a Systems Engineer at UUNET and became a router jockey focusing on BGP, VPNs, and Firewalls, but also was fascinated with UNIX OS’s like Solaris, FreeBSD, and Linux. In the 2000’s I worked at Juniper Networks and Palo Alto Networks, focusing even more on the security-side of the house. After Palo Alto Networks I helped bootstrap a few startups and I’m now at Fortinet leading a Solutions Architect team.

It was really at the startups that I rediscovered my passion for programming. Since moving into management I had lost some of my technical edge, but at startups you need to wear a lot of hats and I found that I could add a lot of value by building scripts to automate tasks for my team. I started in BASH writing some log parsers and a nice interactive configuration script for a hardware appliance I had built for our product. Then one of the SEs on my team created a cool Python script that integrated our product with AWS, and that really inspired me to learn Python.

Of all of these positions, which one was your favorite and why?

Maybe it's just remembering the good old days through rose-colored glasses, but I remember feeling so proud and happy working at UUNET, the largest ISP in the world at the time, and the excitement of working with so many large customers designing networks. I was one of the youngest Systems Engineers, if not the youngest at the time, and it was such a great experience to learn from the best of the best. I gained a lot of confidence presenting in front of customers and performing training sessions for my peers. Good times!

What’s your most controversial programming opinion?

I wrote a blog post in 2019 about the state of the UNIX Philosophy and how I believe it should be modernized. (https://blog.kellybrazil.com/2019/11/26/bringing-the-unix-philosophy-to-the-21st-century/) The argument being that one of the coolest features of UNIX is composition - chaining multiple programs together using pipes. The problem is that there is too much variability in the text output which puts the onus on the user to parse and manipulate the unstructured output for the next stage of the pipeline. But what if command-line tools had a structured output option, like JSON? Then it would be easier to filter the data using jq or other structured data query tools before passing off to the next stage.
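To make the contrast concrete, here is a minimal sketch in Python. The command output, the field names, and both helper functions are made up for illustration and do not correspond to any real tool. The point is only that the text version depends on column order and whitespace, while the JSON version looks fields up by name.

```python
import json

# Hypothetical human-readable output of a disk-usage style command.
TEXT_OUTPUT = """\
Filesystem  Size  Used  Mounted on
/dev/sda1   50G   20G   /
/dev/sdb1   100G  75G   /data
"""

# The same information from a hypothetical structured-output option.
JSON_OUTPUT = json.dumps([
    {"filesystem": "/dev/sda1", "size": "50G", "used": "20G", "mounted_on": "/"},
    {"filesystem": "/dev/sdb1", "size": "100G", "used": "75G", "mounted_on": "/data"},
])


def used_from_text(text, mount):
    """Fragile: assumes the column order and that no field contains spaces."""
    for line in text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if fields and fields[-1] == mount:
            return fields[2]
    return None


def used_from_json(text, mount):
    """Fields are addressed by name, so column order no longer matters."""
    for entry in json.loads(text):
        if entry["mounted_on"] == mount:
            return entry["used"]
    return None
```

If the hypothetical tool ever added or reordered a column, `used_from_text` would silently return the wrong field, while `used_from_json` would keep working.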

There has been a lot of positive and negative feedback about this article on Hacker News and Reddit. It seems a lot of people have felt the same way as me and have been hoping that more commands would allow a JSON or other structured output option for scripting, while keeping the default human-readable output for the console. The detractors typically tend to be get-off-my-lawn types who despise JSON and see it as a flavor-of-the-month data format. Others think that there is no problem to solve - just use awk, sed, cut, tr, etc. to parse the data manually like we have been doing since the 70’s.

I think the structured output advocates are winning out. More and more command-line tools now have a structured output option - typically JSON. And using JSON on the command-line has become more common with tools like jq, jp, python, ruby, etc.

If you could dictate that everyone in the world should read one book, what would it be?

In my early 20’s I read a few books by Carl Sagan and could not get enough of them. All of his books are great and deal with science, philosophy, psychology, history, and humanity. I believe one in particular is so relevant today. His book Demon-Haunted World sadly predicted the internet age of misinformation that was just a trickle at the time the book was written. It talks about why people believe falsehoods, but even more importantly, how to learn logic, logical fallacies, understand the scientific method, and build critical thinking skills to combat misinformation. Two aphorisms of his that I really take to heart are: 1) “Extraordinary claims require extraordinary evidence”, and 2) “Have an open mind, but not so open that your brain falls out”.

If you could teach every 12 year old in the world one thing, what would it be and why?

Logic and how to identify logical fallacies. Critical thinking skills are not adequately taught in our education system and we are seeing that democracy cannot function in a society so easily swayed by rhetorical tricks and misinformation. These skills are not only good for humanity in general, but are also very valuable to the individual so they don’t fall into scams and grift schemes that are so prevalent today.

If I gave you $100 million to invest in one thing right now, where would you put it?

There’s more than enough crazy VC money going around these days. If there is a Pitch Deck and a Pulse, it’s already getting funded. But if I had to choose I’d say anything related to clean energy and climate change mitigation. Maybe new battery tech which will help us move to clean energy sources more quickly?

That’s probably the most important issue of our time, in my opinion. I want my children to not only be able to survive on a habitable planet, but thrive and be able to enjoy nature as previous generations have. We all know by now climate change will have huge global impacts on economies, migrations, famines, disease, conflicts, etc. We are already behind and need to invest more in this area quickly.

What’s the funniest GitHub issue you’ve received?

I always love to get GitHub issues asking for a new jc parser for a command only to look at the man page and find that there is already a structured output option for that command. One, it means I don’t have to write and test a new parser. Two, I get to inform the user that their problem is already solved. And three, it’s encouraging to see the trend increase in popularity.

Why was jc started?

It was a combination of things. I was starting to get pretty proficient at Python and I was trying to think of a good project that could help me consolidate all of the tricks I was learning for future reference as well as expand my horizons. I remembered the pain of parsing command output in the past and had some ideas of how a command filter could fit in the UNIX ecosystem. I thought it would be really cool to use jq to filter the output instead of the traditional text filtering tools. I did some digging around and found others were interested in structured command output, so I started jc as a proof of concept that slowly evolved into a production utility.

Where did the name for jc come from?

I wanted the name to be short because I knew the command would be used in pipes. I also saw it as complementary to jq (JSON Query), so I thought jc (JSON Convert) would be good. But, for some reason, I never used JSON Convert in the README, so I guess it turned into JSON CLI Output Utility. At the end of the day, it was mostly about keeping the command-line as short as possible when piping. I’ve seen some people say it stands for “Jesus Christ”, as in, “Jesus Christ - where has this tool been all my life?!”, which I think is pretty funny.

Who, or what was the biggest inspiration for jc?

When I was doing more BASH scripting I felt the pain of parsing command output and I was also just learning JSON and jq. I thought it would be cool to use jq to filter command output instead of awk, sed, cut, tr, etc. So, I guess jq was the biggest inspiration for jc.

Are there any overarching goals of jc that drive design or implementation? What trade-offs have been made in jc as a consequence of these goals?

I initially envisioned jc as just a cli tool to be used in a pipeline. Early feedback from users made it clear many people just wanted to type `jc <command-name>`, which was a fun challenge. I eventually refactored how that functionality worked a couple of times with some help from contributors. I also received feedback that jc would be a great plugin to be used in Ansible, Salt, Nornir, and other automation platforms. That’s when I got more serious about also allowing jc to be used as a Python library. At that point I had to be careful about any changes to the internal API, since the code could now be imported into other projects.

Luckily I got some good feedback early in development because I initially only thought of jc as a dumb text parser and all JSON values were just text strings. But then I saw the value in having a schema with predictable types for different keys and even adding convenience fields. Having those insights early from user feedback meant I didn’t have to change the API too many times, which would have been really annoying to users.
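The difference between an all-strings result and a typed schema can be sketched as follows. This is an illustration only, not jc's code: the key names, the `INT_KEYS` set, and the `used_percent` convenience field are assumptions chosen for the example.

```python
# Hypothetical schema: keys whose values should always be integers.
INT_KEYS = {"total", "used", "free"}


def to_int(value):
    """Return value as an int when possible, otherwise None."""
    try:
        return int(value)
    except (TypeError, ValueError):
        return None


def process(raw_entry):
    """Convert an all-strings entry into one with predictable types."""
    processed = {}
    for key, value in raw_entry.items():
        processed[key] = to_int(value) if key in INT_KEYS else value

    # Convenience field derived from the typed values (illustrative).
    total, used = processed.get("total"), processed.get("used")
    if total and used is not None:
        processed["used_percent"] = round(100 * used / total, 1)
    return processed
```

With a schema like this, a script consuming the output can do arithmetic on `total` directly instead of converting strings on every use.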

The biggest challenge is ensuring that a new feature doesn’t break existing use-cases. I want jc to be rock-solid since it is used in automation scripts. To that end I make sure the parsers are very well tested, with over 700 tests to date. I find that writing a parser is typically easy - sometimes taking less than an hour. It takes much longer to gather enough command output samples from different command versions and Operating Systems and create good tests for the parser. I don’t want to release a parser until I feel pretty confident about it.

Another challenge was packaging. Early on in the development of jc I wasn’t shy about utilizing 3rd-party libraries. Unfortunately, though, I found that having many external dependencies can make packaging on various Operating Systems and Linux distributions challenging. I ended up vendoring some of the smaller libraries so jc could be more easily packaged on homebrew, Fedora, Debian, etc. Packaging, in general, is already a challenge, but packaging Python apps just adds to the complexity. I also used PyOxidizer (https://github.com/indygreg/PyOxidizer) to create macOS, Linux, and Windows binaries, which makes it easier for users to try out jc by running the executable anywhere on the system.

I've always wondered how things are made available via Homebrew. Could you maybe walk me through the steps involved in making jc available on Homebrew?

It's been a long time, but I remember being overwhelmed with the instructions at https://docs.brew.sh/Python-for-Formula-Authors, so I punted on it for a while. Then I thought I'd create a binary with PyOxidizer and then just create a binary package in Homebrew. But I think I ended up looking up the formula for another Python package like pygments (https://github.com/Homebrew/homebrew-core/blob/master/Formula/pygments.rb) and using it as a template for jc. I also used the test section of jq's formula as a template for the test section of jc's formula. Fortunately, after I created the initial formula, some other maintainers seemed to take over keeping it up to date when new versions were published to PyPI.

What is the most challenging problem that’s been solved in jc, so far (code links to any particularly interesting sections are welcomed)?

Some of the solved challenges were:

Adding timezone naive and aware epoch timestamps

Since jc is used in scripts and dates are one of the more common data elements in command output, I figured it would be very convenient for the end-user if the dates were parsed and converted into something machine-readable. There are some standard ways of representing dates, but hardly any command-line apps output dates in those ways! I decided on epoch timestamp since it is a simple integer and has good library support.

But then I realized that most commands do not output the timezone information, and even if they did, there is no standard way of representing time zones. And then, even if you know the timezone, it is not trivial to convert the timestamp to be ‘aware’, so that the time would be known and consistent no matter where jc was run. And I couldn’t cheat by having jc snoop the current OS timezone because the text jc is parsing may have come from another machine.

This was a very tricky problem to solve, not only technically, but also just wrapping my brain around what the ‘right’ answer should be in different situations took a lot of research, trial, and error. It was very rewarding and I believe the solution adds value to the user so they don’t need to do the work of converting timestamps themselves. That’s really what jc is all about - solving the hard problems once so they don’t need to be solved over and over again by different people, with more and more bugs being introduced to the world.
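The naive versus aware distinction can be illustrated with a short Python sketch. This is not jc's implementation: the date format, the function name, and the returned key names are assumptions for the example, and only a trailing `UTC` marker is recognized.

```python
from datetime import datetime, timezone


def timestamps(date_text):
    """Return naive and UTC-aware epoch timestamps for strings such as
    '2021-03-23 00:14:11' or '2021-03-23 00:14:11 UTC' (assumed format)."""
    parts = date_text.split()
    is_utc = parts[-1] == "UTC"
    if is_utc:
        parts = parts[:-1]

    dt = datetime.strptime(" ".join(parts), "%Y-%m-%d %H:%M:%S")

    # Naive: interpreted in the local timezone of the machine running
    # this code, so the value can differ from host to host.
    naive_epoch = int(dt.timestamp())

    # Aware: only computed when the text itself says the time is UTC,
    # so the value is the same no matter where the code runs.
    aware_epoch = None
    if is_utc:
        aware_epoch = int(dt.replace(tzinfo=timezone.utc).timestamp())

    return {"epoch": naive_epoch, "epoch_utc": aware_epoch}
```

When the text carries no timezone at all, the sketch leaves the aware value as `None` rather than guessing from the local OS settings, since the text may have come from another machine.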

I started with a function that parsed the text of the date string in several different formats that I found in command output and returned a dictionary of the date’s format ID, naive timestamp, and aware timestamp (if available). I later turned it into a class for ease of use in the parsers and testing. At the end of the day I could really only support the UTC time zone for aware timestamps because of all of the complexities and library support. But I think this is an acceptable limitation because most important server data will be in the UTC timezone these days. It was my first attempt at using classes in Python, but I think it turned out ok. (https://github.com/kellyjonbrazil/jc/blob/v1.17.7/jc/utils.py#L259)

Adding streaming parsers (lazy loading data for efficient memory utilization)

One of the issues I found was that some commands can output huge amounts of data. For example, `ls -lR /` can output gigabytes of text. When piped to jc, it could take 30 seconds or more to get the converted output and could even use multiple gigabytes of RAM for jc to process it. I also received requests from some users to support commands that could output data indefinitely, like ping, iostat, vmstat, etc. jc would never output JSON in some of these scenarios because it would never get an EOF from the originating program.

When I first started with jc I had some thoughts about solving this using JSON Lines (aka NDJSON), but didn’t want to bother with it until users requested it. I also noticed that in the feedback to my article on Bringing the UNIX Philosophy to the 21st Century (https://blog.kellybrazil.com/2019/11/26/bringing-the-unix-philosophy-to-the-21st-century/) on Hacker News and Reddit, some people were making the mistaken argument that JSON could not be used in these situations. So I figured it was time to bite the bullet and develop “streaming” parsers. That is, parsers that will accept the command output line-by-line and process lazily so the JSON Lines output could be generated immediately.

I had to learn about how to use generators to emit the JSON lines, and also figure out how CLI users and library users could consume the data. I also found that the OS will buffer output from one program to another when piping, so I needed to add an unbuffer option for the user so they could easily troubleshoot their pipeline. I also decided that I needed to treat exceptions differently with a streaming parser because you don’t always want to break the pipeline just because a single line failed to parse for some reason. It’s really fun to play around with the program after developing a new feature to see how users can use it and what potential pitfalls they may run into. Designing the software to be as ergonomic as possible is a real challenge and I find it to be very rewarding.
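A generator-based streaming parser of the kind described here can be sketched in a few lines of Python. This is an illustration under stated assumptions, not jc's code: `parse_line`, the `name value` input format, the `ignore_exceptions` flag, and the `_error`/`_line` keys are all hypothetical.

```python
import json


def parse_line(line):
    """Hypothetical per-line parser: 'name value' -> dict."""
    name, value = line.split()
    return {"name": name, "value": int(value)}


def stream_parse(lines, ignore_exceptions=False):
    """Lazily yield one dict per input line instead of building a list."""
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        try:
            yield parse_line(line)
        except Exception as exc:
            if not ignore_exceptions:
                raise
            # Report the bad line and keep the pipeline going.
            yield {"_error": str(exc), "_line": line}


def to_json_lines(lines, ignore_exceptions=False):
    """Yield one JSON document per line (JSON Lines)."""
    for item in stream_parse(lines, ignore_exceptions):
        yield json.dumps(item)
```

A command-line wrapper could consume this with something like `for out in to_json_lines(sys.stdin): print(out, flush=True)`. Because nothing is accumulated, memory use stays flat and output starts as soon as the first line arrives, even if the producing program never sends an EOF.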

To solve this problem I needed to modify the cli library in jc so it would ‘detect’ when a streaming parser was selected and branch to a different routine to pull the individual processed lines in real-time. (https://github.com/kellyjonbrazil/jc/blob/v1.17.7/jc/cli.py#L636) I also needed to create a template so other contributors could more easily create streaming parsers. (https://github.com/kellyjonbrazil/jc/blob/master/jc/parsers/foo_s.py)

I’m pleased with how it turned out. For example, now instead of using multiple gigabytes of RAM to convert the output of `ls -lR /` it only takes 10 MB and output begins immediately.

Parsing tables with missing fields

On the parser front - one of my early challenges was figuring out how to parse human-readable command output. When we look at a table, we can reason about it very quickly - even when data is missing. For example, if there are blank fields, our brain tells us to ignore them because we understand the context of the table layout.

Parsing command output that had blank entries, what I call a sparse table, was a tough initial challenge. I found myself solving it more than once, so I made it into its own internal parser function that several command parsers now use.
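One simple way to handle a sparse table, shown here only as a sketch and not as jc's internal function, is to slice each row at the character positions where the header names begin. It assumes left-aligned columns, single-word header names, and values that never overflow into the next column; the sample table and function name are made up.

```python
def parse_sparse_table(text):
    """Parse a left-aligned, fixed-width table that may have blank cells."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0]
    names = header.split()

    # Record the character offset where each header name starts.
    starts = []
    pos = 0
    for name in names:
        idx = header.index(name, pos)
        starts.append(idx)
        pos = idx + len(name)

    rows = []
    for line in lines[1:]:
        row = {}
        for i, name in enumerate(names):
            end = starts[i + 1] if i + 1 < len(starts) else None
            value = line[starts[i]:end].strip()
            row[name.lower()] = value or None  # blank cell -> None
        rows.append(row)
    return rows


SAMPLE = "\n".join([
    "NAME    STATE   PORT",
    "web     up      80",
    "db              5432",
    "cache   down",
])
```

Splitting each row on whitespace would misplace `5432` under `STATE` for the `db` row; slicing by header position keeps it under `PORT`.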

I remember trying to wrap my brain around how to solve that problem, coding and debugging with my little 6-month old girl on my lap. That’s not an ideal coding setup, for sure! I really don’t dare to go back and refactor it at this point since it “just works” and I’m not 100% sure how. (https://github.com/kellyjonbrazil/jc/blob/v1.17.7/jc/parsers/universal.py#L34) If anyone has a cleaner method they would like to contribute, I’m definitely open to it!

Are there any competitors or projects similar to jc? If so, what were they lacking that made you consider building something new?

There are other projects that have the same idea but have taken different approaches, including PowerShell and some newer BASH-like shells such as NGS, Nushell, Elvish, and Oil shell. FreeBSD has a library called libxo that allows developers to output in different formats, such as XML and JSON. Also, there are some attempts to go back and add JSON output functionality to coreutils or coreutils-like tools, including junix (https://github.com/e-n-f/junix) and pyjunix (https://github.com/aanastasiou/pyjunix).

I believe my approach works because you don’t need to give up BASH or existing utilities to get the benefit of JSON output. It fits with the UNIX philosophy of composition and jc does its “one thing well”. That being said, there have been discussions about integrating jc with some of the next generation shells, and I think that is exciting.

What was the most surprising thing you learned while working on jc?

I was surprised at how controversial it would be. It seems like people either love or hate it. I didn’t realize there was so much antipathy towards JSON, in general. I think younger developers are used to working with JSON because it’s ubiquitous. But many in my older generation see JSON as more of a passing fad. I didn’t realize there would be so many strong emotions around a serialization format. I think it’s great, though. I love the lively discussion and I think it’s good to get people thinking in new ways. My favorite feedback is when someone says, “Why didn’t I think of this?!”

What is your typical approach to debugging issues filed in the jc repo?

Early on I added some tools within jc to help with troubleshooting. These include a `-d` option for debug messages, and `-dd` will provide verbose tracebacks that include the offending lines of code. With that output I can usually spot the problem very quickly.

Also, one of the early contributors to jc added a custom parser, or plugin feature. This made it easy to make a temporary fix for the user that they could use until the fix was added to the next release of code. This also allows users to request a new parser that I can work on and have them test without needing to upgrade jc on their end. It’s been super helpful for parser development.

Jc tends to be pretty simple to troubleshoot. All I need is a sample of the command output string. Then I can echo the string in my terminal and pipe it to jc and see what the user is seeing. Then when I fix the problem, I add the user’s command output as another test so we don’t get regressions.
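The habit of turning a reported sample into a regression test can be sketched with Python's `unittest`. Everything here is a stand-in: the `parse` function is a toy `key: value` parser, not one of jc's, and the sample text is invented.

```python
import unittest


def parse(data):
    """Hypothetical stand-in parser: 'key: value' lines -> dict."""
    result = {}
    for line in data.splitlines():
        if ":" in line:
            # Split only on the first colon so values may contain colons.
            key, value = line.split(":", 1)
            result[key.strip().lower()] = value.strip()
    return result


# Output captured from a (hypothetical) bug report, kept verbatim.
USER_SAMPLE = "Name: eth0\nAddress: 10.0.0.5\nNote: a:b\n"


class RegressionTests(unittest.TestCase):
    def test_user_sample(self):
        expected = {"name": "eth0", "address": "10.0.0.5", "note": "a:b"}
        self.assertEqual(parse(USER_SAMPLE), expected)
```

Keeping the user's text verbatim as a fixture means the exact input that once failed is exercised on every future test run.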

What is the release process like for jc?

In the early days, my focus was on ramping up the number of supported commands and file-types. I would release a new version after at least three new parsers were added or a major feature was added. Now that jc is more mature with over 80 parsers and there aren’t new features coming out as frequently I’ll typically push out a minor release when a new parser is developed.

There is no real schedule - minor releases can be released within days or months, just depending on when I have time to work on them or when contributors submit a pull request.

All releases get published to PyPI so they can be installed via pip. Homebrew will usually pick up updates the same day as I publish to PyPI. Other OS repositories can take some time to update the packages. If it’s a big enough or interesting enough change, I’ll also build new binaries and add them to the Release on GitHub. I used to maintain a packaging site, but now I only use GitHub releases for binaries, which has simplified the process.

A contributor did the initial CI/CD pipeline automation using GitHub Actions. That has been invaluable because it allows jc to be tested on various Operating Systems and Python versions on every push. This has exposed some subtle bugs that weren’t caught with local testing and I was able to quickly fix them before pushing a release. That has really helped keep the quality of jc releases high.

What's the process to make software available on PyPI for use with pip?

I followed the tutorial here: https://packaging.python.org/en/latest/tutorials/packaging-projects/. I created a couple of helper scripts in my project that I use so I don't have to remember the commands to build the package files (https://github.com/kellyjonbrazil/jc/blob/master/build-package.sh) and upload to PyPI (https://github.com/kellyjonbrazil/jc/blob/master/pypi-upload.sh).

Is jc intended to eventually be monetized if it isn’t monetized already?

No - I don’t intend to monetize jc. I see this as my way to give back to the community. I have had users suggest I add a Sponsor button on GitHub, but I’m not really interested in making any money off jc. In fact, the original idea of jc is that it should not exist at all! One of the original ideas was that jc would allow people to see the benefit of using structured output on the command-line and inspire programmers to add this functionality to the older tools. That will probably not happen, though. I am encouraged by the latest trend of modernizing older tools, typically with the Rust language. That allows us to reimagine the old tools in a modern setting and structured output is something I think should be considered.

What is the best way for a new developer to contribute to jc?

This is probably true of many open source projects, but I really appreciate having a GitHub issue opened so we can discuss the feature/parser before I get a Pull Request. Also, I’m not sure if this is a strange practice, but I prefer Pull Requests to be committed against the Dev branch instead of Master. I like to keep the Master branch clean and passing tests, and really only contain production pushes. It’s usually not a problem, though. GitHub allows me to easily change the branch when the Pull Request comes in. New parsers are always welcome!

Do you have an example commit of a really simple basic parser someone could reference to get started if they wanted to create a new one and submit a PR?

Here is an example of a very simple parser for the `free` command: https://github.com/kellyjonbrazil/jc/blob/master/jc/parsers/free.py

Usually the best way to get started is to copy the parser template file (https://github.com/kellyjonbrazil/jc/blob/master/jc/parsers/foo.py) to your jc plugin directory (https://github.com/kellyjonbrazil/jc#custom-parsers). Then you can modify the file and test it just like any other parser. You will see the new template file name in `jc -h` and `jc -a` output.

What motivates you to continue contributing to jc?

I love solving problems - they are like puzzles to me. Jc and my other projects give me an outlet to solve problems using code, which I’ve loved doing since I was a kid. I’m not sure why, but it’s not a burden, and it is fun. There are some features or problems that I put off for a while until I feel I understand it well enough, or I finally have the skill to tackle them. Exercising those new skills is very satisfying.

User feedback is also a huge motivator. Knowing that I’m making someone’s job easier or I’m inspiring a new developer definitely keeps me going. I love it when people get excited about jc - it’s such a simple concept, but it generates excitement. I get emails from time-to-time from users of jc explaining how it solved a huge problem for them. You really can’t beat that.

Also, I don’t know if this is strange, but I just like looking at my code and refining it over time. It’s like poetry. You can tell ugly code by looking at it. Maybe it didn’t seem ugly when you wrote it, but when you look at it six months later it looks really bad. It’s a great way to see how far you’ve come and maybe optimize some things with some new tricks that you’ve learned over time. I’m not sure if code is ever actually finished - sometimes after the features work it’s about enhancing the beauty of the code.

Are there any other projects besides jc that you’re working on?

After getting jc going, I found some other opportunities to enhance the JSON experience at the command line. There is no shortage of JSON utilities, but the nice thing about programming is you can make things work the way you like and sometimes others agree with your approach.

I found that jq is great for simple JSON queries, but it can get tough for more complex things. I thought - why not democratize JSON processing a bit more? So I created Jello (https://github.com/kellyjonbrazil/jello), which works much like jq, but allows the user to use pure Python syntax. I think this really improves readability of the JSON filters in BASH scripts, and since so many people know Python, it seemed like a no-brainer.

After working with JSON at the command line for some time, I found that there are use-cases for displaying arrays of objects as a table in the terminal, so I created Jtbl (https://github.com/kellyjonbrazil/jtbl). The idea was to make it dead simple - just pipe the JSON to Jtbl and not need to have too many options. It should just do the right thing out of the box unlike some other utilities I found that required more manual intervention.

Both jc and Jello have Web demos that I host on Heroku, and those were a fun way for me to learn Flask. (https://jc-web-demo.herokuapp.com/) (https://jello-web-demo.herokuapp.com/)

DevOps users also requested an Ansible Plugin for jc, so I contributed to that project as well. (https://galaxy.ansible.com/community/general) In addition, I wrote some code examples for integrating jc with Salt (https://blog.kellybrazil.com/2020/09/15/parsing-command-output-in-saltstack-with-jc/) and Nornir (https://blog.kellybrazil.com/2020/12/09/parsing-command-output-in-nornir-with-jc/).

I also created an interactive TUI for Jello called Jellex (https://github.com/kellyjonbrazil/jellex), which allows you to quickly develop a Jello query and see the results as you type.

One of my first projects was a quick-and-dirty traffic and attack generator for containerized microservices called MicroSim (https://github.com/kellyjonbrazil/microsim) that I used in some Kubernetes security Blog posts. (https://blog.kellybrazil.com/2019/12/05/microservice-security-design-patterns-for-kubernetes-part-1/)

Do you have any other project ideas that you haven’t started?

I do - I keep a list of ideas that I might get to one day, but I probably won’t invest any time until there’s not much more to do with my current projects. It would be nice to be able to build a software or SaaS business some day, but I’m busy enough with my day job that I haven’t really invested any time in bootstrapping any of them.

Care to share some of those ideas with the Console readers?

:) I'll give one example - it's a proof of concept idea of a nutritional website that takes the ingredients list of product food items from the USDA database, runs them through a proprietary algorithm, and gives you a junkfood, or healthy rating, while also recommending healthier options. It includes a bar code scanner and you can save items to your "pantry" and get a "pantry report". I just recently canceled the API that was providing product pictures and pricing information, but the rest of the site is functional. I haven't updated the USDA database in a year, so your mileage may vary. :)

What is one question you would like to ask another open-source developer that I didn’t ask you?

How did you manage to get your software packaged on many different Operating Systems and distros?

I had to do some of the leg work upfront for jc, but once Fedora and Debian picked it up, then it seemed to grow. It seems difficult to find packagers (Fedora, particularly) to get things started for some of my other projects, but maybe I just need to knock on the right doors.

I’ve found that packaging is one of the more difficult issues in software development. There is really no way to know how it all works until you put your software out there and others start packaging it, or you try to package it yourself. It is very complex and you find that it’s easy to do things suboptimally or in ways that can annoy the packagers. Where do you put the man pages in your source? Some packagers want tests included, others don’t. Do you have external dependencies that are not already packaged on a platform? That’s a problem. Some dependencies are stuck on ancient versions, so you need to work around that in your code. Or maybe allow for situations where a dependency is optional. All of these things and more I have run into over the years. I want to be a good open source citizen so I keep a good changelog to alert packagers if there are any breaking changes coming up so they are not blindsided.